Prompting Large Language Models (LLMs), or providing context on the expected model of operation, is an effective way to steer the outputs of such models to satisfy human desiderata after they have been trained. But in rapidly evolving domains, there is often need to fine-tune LLMs to improve either the kind of knowledge in their memory or their abilities to perform open ended reasoning in new domains. When human’s learn new concepts, we often do so by linking the new material that we are studying to concepts we have already learned before. To that end, we ask, “can prompting help us teach LLMs how to learn”. In this work, we study a novel generalization of instruction tuning, called contextual fine-tuning, to fine-tune LLMs. Our method leverages instructional prompts designed to mimic human cognitive strategies in learning and problem-solving to guide the learning process during training, aiming to improve the model’s interpretation and understanding of domain-specific knowledge. We empirically demonstrate that this simple yet effective modification improves the ability of LLMs to be fine-tuned rapidly on new datasets both within the medical and financial domains.

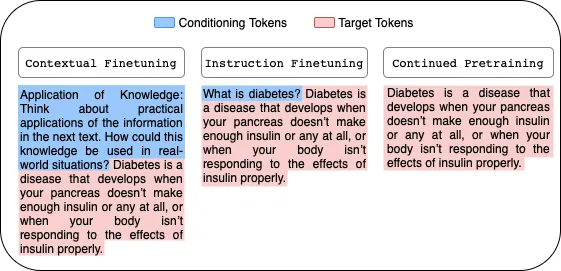

Contextual Fine-Tuning (CFT) is a novel approach that blends in-context learning with gradient-based learning to improve how Large Language Models (LLMs) learn domain-specific knowledge. Unlike traditional continued pre-training or instruction fine-tuning, CFT uses contextual prompts to guide the model’s learning process.

To test domain adaptation in a challenging setting, we curated a biomedical dataset of 121,489 biomedical journal articles (MDPI) and 29 open-source medical textbooks. We release OpenMedText as a resource for domain adaptation research.

We evaluate on both medical and financial tasks to show the effectiveness of CFT.

Medical tasks include subsets of MMLU including Anatomy, Clinical Knowledge, College Biology, College Medicine, Medical Genetics, Professional Medicine, and the MedQA professional exam dataset.

Financial tasks include FiQA sentiment analysis, MultiFin headline classification, and Causal20.

| Model (7B) | Anatomy | Clinical Knowledge | College Biology | College Medicine | Medical Genetics | Professional Medicine | MedQA | Average |

|---|---|---|---|---|---|---|---|---|

| Chat | 44.07 | 46.79 | 48.61 | 39.02 | 49.00 | 48.90 | 38.96 | 45.05 |

| Chat (CPT) | 45.19 | 47.17 | 49.31 | 43.93 | 50.50 | 46.32 | 39.28 | 45.96 |

| Chat (CFT) | 48.15 | 48.87 | 52.08 | 44.22 | 54.00 | 46.69 | 40.65 | 47.81 |

| AdaptLLM | 44.45 | 47.36 | 48.27 | 39.60 | 45.00 | 38.61 | 37.12 | 42.92 |

| Model (13B) | Anatomy | Clinical Knowledge | College Biology | College Medicine | Medical Genetics | Professional Medicine | MedQA | Average |

|---|---|---|---|---|---|---|---|---|

| Chat | 51.85 | 56.60 | 54.17 | 46.82 | 63.50 | 56.99 | 45.33 | 53.61 |

| Chat (CPT) | 50.37 | 60.00 | 55.90 | 50.58 | 62.00 | 57.35 | 43.95 | 54.31 |

| Chat (CFT) | 53.33 | 63.21 | 57.99 | 56.35 | 62.50 | 57.72 | 44.85 | 56.56 |

| Model (7B) | FiQA (F1) | Causal20 (F1) | MultiFin (F1) | Average |

|---|---|---|---|---|

| Chat | 56.40 | 90.40 | 38.74 | 61.48 |

| Chat (CPT) | 62.53 | 90.16 | 38.23 | 63.64 |

| Chat (CFT) | 67.69 | 90.17 | 46.01 | 67.96 |

| Model (13B) | FiQA (F1) | Causal20 (F1) | MultiFin (F1) | Average |

|---|---|---|---|---|

| Chat | 61.18 | 84.77 | 45.81 | 63.92 |

| Chat (CPT) | 66.96 | 90.06 | 45.33 | 67.45 |

| Chat (CFT) | 70.55 | 89.87 | 50.94 | 70.45 |

@inproceedings{

choi2025teaching,

title={Teaching {LLM}s How To Learn with Contextual Fine-Tuning},

author={Younwoo Choi and Muhammad Adil Asif and Ziwen Han and John Willes and Rahul G. Krishnan},

booktitle={The Thirteenth International Conference on Learning Representations},

year={2025},

url={https://arxiv.org/abs/2503.09032}

}